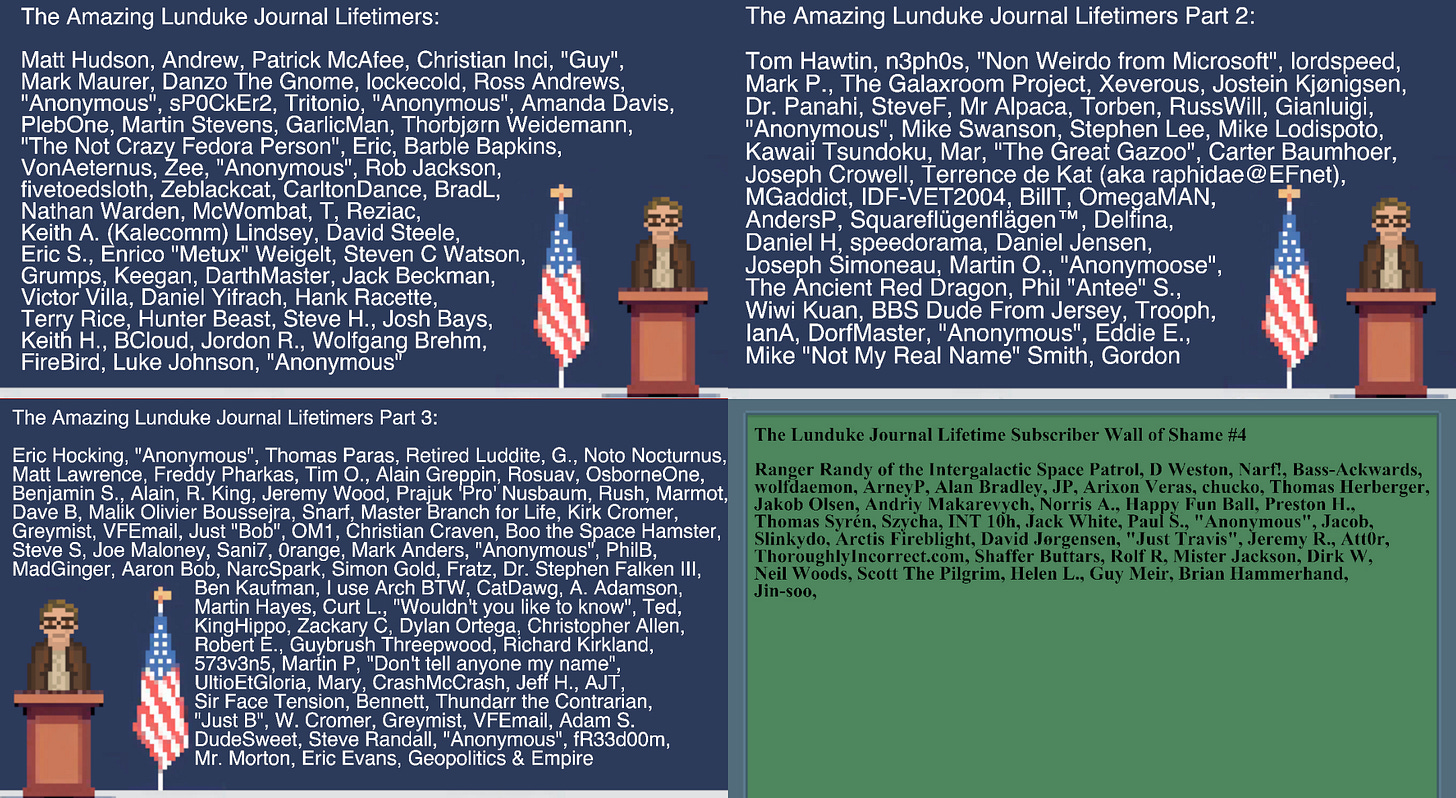

Woo-hoo! The 4th Lunduke Journal Lifetime Subscriber Wall of ~~Shame~~ Awesomeness is almost full!

That means that, within the next day or two, the massively discounted Lifetime Subscriptions will go back to their normal price. So if you want to snag the $89 / $99 Lifetime Sub (instead of paying $300), now’s your last chance.

If you are already a Lifetime Subscriber and want to be added to the 4th (or the start of the 5th) wall, email me (bryan at lunduke.com). There are only a couple of spots left on Wall 4.

The new Lifetime Wall designs are locked and loaded, and will make their grand debut at the end of all new shows starting either Friday or Monday.

I also wanted to take a moment to thank all of the non-Lifetime Subscribers. The Lifetime Subs may get a little extra attention at the end of the shows… but every subscriber (Monthly & Yearly) helps to make this work possible.

All of you rule.

-Lunduke