- How many critical software packages are maintained by a small, unpaid team (or, worse, a single person)?

- What happens when that person gets bored with the project... or decides to do something malicious (as was the case with the recent backdoor in the XZ compression tool)... or... gets hit by a bus?

These are not only fair questions to ask... but critical as well.

The reality is that we're not simply talking about a handful of key software packages here -- the entirety of our modern computing infrastructure is built on top of thousands of projects (from software packages to online services) that are built, maintained, and run entirely by one person (or, when we're lucky, 2 or 3 people).

One wrong move and the Jenga tower that is modern computing comes crashing down.

Just to give you an idea of how widespread -- and dire -- this situation truly is, I would like to call your attention to two projects that most people don't even think about... but that are critical to nearly every computer system in use today.

The TZ Database

Dealing with time zones in software can be tricky. There are many rules and many time zone details to keep track of. As luck would have it, a standard database (the TZ Database) was built to make it easier for software projects to get those details right.

And, every time those time zone details (across the world) are changed -- something which can happen several times per year, often with only a few days' notice -- that database needs to be updated.

What happens if those details are not updated... if the time zone data is incorrect?

At best? A few minor scheduling inconveniences. At worst? Absolute mayhem... computer-wise. Times can become significantly out of sync between systems. Which can mess up not only scheduling (an obvious issue), but security features as well (as some encryption tools require closely synced time).
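To make that a little more concrete, here is a minimal Python sketch of how a program typically leans on the TZ Database. It assumes Python 3.9 or later (which ships the standard `zoneinfo` module) and that time zone data is installed on the system. The helper name `utc_offset_hours` is made up for this illustration.

```python
from datetime import datetime, timezone
from zoneinfo import ZoneInfo  # standard library; reads the installed TZ Database


def utc_offset_hours(zone_name: str, when_utc: datetime) -> float:
    """Return the UTC offset (in hours) observed in `zone_name` at `when_utc`.

    `utc_offset_hours` is a hypothetical helper for this article,
    not part of any library.
    """
    if when_utc.tzinfo is None:
        # A naive datetime would be silently treated as system-local time.
        raise ValueError("when_utc must be a timezone-aware datetime")
    # The conversion rules (offsets, daylight saving dates) come entirely
    # from the TZ Database copy on this machine.
    local = when_utc.astimezone(ZoneInfo(zone_name))
    return local.utcoffset().total_seconds() / 3600
```

Asking for `"America/New_York"` at noon UTC on January 15, 2024 and again on July 15, 2024 should give `-5.0` and `-4.0`. The program itself knows nothing about daylight saving time. If the local copy of the database is stale, the same call quietly returns the wrong answer.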

To give you an idea of how widespread the TZ Database is, here is just a teeny tiny fraction of the number of software projects which rely upon it:

- BSD and Unix systems: FreeBSD, OpenBSD, Solaris

- macOS & iOS

- Linux

- Android

- Java, PHP, Perl, Ruby, Python, GCC, JavaScript

- PostgreSQL, MongoDB, SQL Server

Yeah. It's basically a list of "all software". And that's just a sample of the software which heavily relies on the TZ Database for making sure timing (and everything that is time-critical) is correct.

Now. With something this absolutely critical, surely a highly paid team of people -- from multiple companies -- is responsible for keeping it updated... right?

Oh, heavens, no.

Two people. Two!

While the database itself has been officially published on ICANN (the "Internet Corporation for Assigned Names and Numbers") servers for the last few years, only 2 people actually maintain the TZ Database.

SQLite

Did you know that SQLite is the most used database system in the entire world? More than MySQL, MS SQL Server, and all the rest of them. Good odds, SQLite is used on more systems than all other database systems in the world... combined.

In fact, SQLite is a critical component in the following systems:

- Android, iOS, macOS, & Windows

- Firefox, Chrome, & Safari

- Most set top boxes and smart TVs

- An absolutely crazy number of individual software packages (from Dropbox to iTunes)
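Part of the reason SQLite turns up in so many places is that it is a library that runs inside the application, with no separate database server to install or manage. As a rough sketch (not how any of the products above actually use it), here is Python's built-in `sqlite3` module creating and querying a database entirely in memory. The table, the rows (taken from the numbers in this article), and the function name `maintainer_counts` are made up for the illustration.

```python
import sqlite3  # Python's standard library bundles bindings to SQLite


def maintainer_counts() -> list[tuple[str, int]]:
    """Build a throwaway in-memory database and read it back.

    Hypothetical example data; the figures mirror the ones in this article.
    """
    conn = sqlite3.connect(":memory:")  # no server, no file, just the library
    try:
        conn.execute("CREATE TABLE maintainers (project TEXT, people INTEGER)")
        conn.executemany(
            "INSERT INTO maintainers VALUES (?, ?)",
            [("TZ Database", 2), ("SQLite", 3)],
        )
        conn.commit()
        rows = conn.execute(
            "SELECT project, people FROM maintainers ORDER BY people"
        ).fetchall()
        return rows
    finally:
        conn.close()
```

Checking `sqlite3.sqlite_version` in the same session shows which SQLite release your Python happens to be carrying around, which is a nice reminder of how quietly this dependency travels.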

Now, ready for the fact you knew was coming?

SQLite is maintained by... 3 guys.

Not "3 lead developers who oversee an army of open source contributors"... just 3 guys. Total. And they don't accept public patches or fixes.

"SQLite is open-source, meaning that you can make as many copies of it as you want and do whatever you want with those copies, without limitation. But SQLite is not open-contribution."

A piece of software that is practically the cornerstone of modern computing. Trillions of dollars worth of systems relying upon it -- every second of every day. 3 guys.

Corporations rest on the shoulders of... a couple of volunteers

Add those two projects together. 5 guys, in total, are responsible for the TZ Database and SQLite. Software and data used on practically every computer on the planet.

And that's just the tip of the iceberg. Critical projects -- often with small teams of (more often than not) unpaid volunteers -- form the core of the vast majority of major software projects. Including commercial ones.

ImageMagick? XZ? FFmpeg?

You'll find those at the heart of more systems than you can count. Good odds you use all three, every day, and don't even notice it.

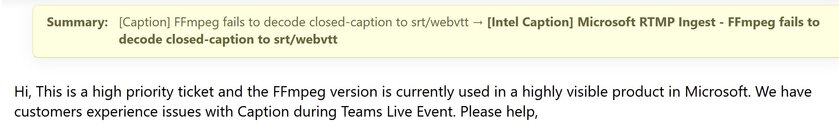

And, as the small team behind FFmpeg pointed out in a recent X post, getting those large corporations to contribute -- in any meaningful way -- can be like pulling teeth:

The xz fiasco has shown how a dependence on unpaid volunteers can cause major problems. Trillion dollar corporations expect free and urgent support from volunteers.

Microsoft / Microsoft Teams posted on a bug tracker full of volunteers that their issue is "high priority"

After politely requesting a support contract from Microsoft for long term maintenance, they offered a one-time payment of a few thousand dollars instead.

This is unacceptable.

We didn't make it up, this is what Microsoft actually did:

https://trac.ffmpeg.org/ticket/10341#comment:4

The lesson from the xz fiasco is that investments in maintenance and sustainability are unsexy and probably won't get a middle manager their promotion but pay off a thousandfold over many years.

But try selling that to a bean counter

In short: Microsoft wanted to benefit from the (free) work done by FFmpeg... but was only willing -- at most -- to toss a few peanuts at the team. And, even then, that (mildly insulting) offer of meager support was only done when Microsoft needed assistance.

A few parting thoughts...

There are valuable lessons to be learned from all of this -- including the need for real, meaningful support (by large corporations) of the projects they rely so heavily upon.

But, for now, I'd like to leave you with a few observations.

- Corporations don't hesitate to throw large sums of money at tech trade organizations (such as The Linux Foundation -- which brings in hundreds of millions of dollars every year from companies like Microsoft)... yet they are hesitant to provide significant funding to the projects they rely directly upon to ship their own, often highly profitable, products (see the projects listed earlier in this article).

- How many of these smaller projects -- which Linux desktops and servers rely entirely upon -- receive regular funding from The Linux Foundation (or companies which fund The Linux Foundation)? I'll answer that question for you: Next to none.

- Even high-profile open source projects -- such as KDE or GNOME -- struggle to bring in enough funding to afford two full-time developers on payroll.

- We have avoided catastrophe, thus far, through dumb luck. The recent XZ backdoor, for example, was found by a lone developer who happened to notice a half-second slowdown... and happened to have the time (and interest... and experience) to investigate further. The odds of that being discovered before significant harm was done... whew!... slim. So much dumb luck.

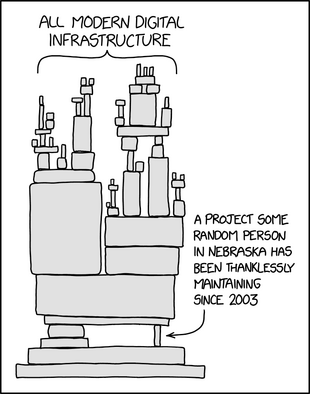

Go take a look at that XKCD comic at the beginning of this article again. Funny, right? And it makes a solid point.

You know what's terrifying, though? The reality is far more precarious.

There's not simply one project -- by one guy -- holding all of modern computing up.

There are thousands of projects. Each made by one guy. And hundreds of those projects (at least) are load-bearing.

Dumb luck only lasts for so long.